This is my idea, I thought. No one knows it like I do. And it’s okay if it’s different, and weird, and maybe a little crazy. I decided to protect it, to care for it. I fed it good food. I worked with it, I played with it. But most of all, I gave it my attention.

“What Do You Do With an Idea?” by Kobi Yamada and Mae Besom.

I’m thinking about writing a web framework. This wouldn’t be my first time.

Why?

- Primarily, because I’ve got an idea, and want to explore it. The quote and photo above from “What Do You Do With An Idea” reminded me that creativity and inspiration are muscles we can train – the more we explore our ideas with curiosity, the more the ideas will keep coming.

- I want to play with code more, try out things for the sake of curiosity and experimentation and be okay with it not necessarily building toward something as part of my day-job.

- I’m a software engineer who enjoys building the thing to build the thing. At Culture Amp, I’m on the “Foundations” team that builds things like the design system and our common tooling, rather than working on the main products.

- Every now and then I want to build a little side project, but get paralyzed – what should I build it with? None of the existing web frameworks I look at appeal to me. I want a single framework for front end and back end, I want a language with a good compiler, and I want something I can grasp from front to back. If there is magic, I want it to be magic I understand.

- I’ve learned a lot since I last tried this. In particular, at Culture Amp I’ve been exposed to languages like Elm on the front end and concepts like Event Sourcing / CQRS on the back end. They’re similar and interconnected, and I want to see what it looks like to build a framework that builds on patterns from all of these.

- I’ve enjoyed this kind of project in the past!

(Side note: I’m aware that being in a position where I have the time and money to indulge in this is a sign of incredible privilege… I’m still learning what it means to actively tear down the unfair systems that contribute to that privilege. There are more significant things I can invest my time in for certain. But I’ll save that for another blog post 😅)

What have I tried in the past?

- I once was the main developer (including doing a ground-up rewrite) of a framework called Ufront. It loosely copied MVC.net on the backend, but could compile to several backend languages (thanks to Haxe). The killer feature was that you could re-use code – routing, controllers, views, models, validators – on the front end, and have seamless compiler help when calling backend APIs from the front end.

To this day I haven’t seen another framework attempt that level of back-end front-end integration, with the possible exception of Meteor. (Admittedly, I haven’t been looking for a while.) I feel Ufront tried to be too many things – a generally useful backend, a front-end integration layer, an ORM, an auth system, and more. At the end of the day, with the majority of the development coming from me, it didn’t have momentum for such an ambitious scope. I did build two useful education apps with it though!

- I also attempted a more tightly scoped project that never got off the ground, called “Small Universe”. I started this around the time I was interviewing for Culture Amp, and it was heavily influenced by Kevin Yank’s talk “Developer Happiness on the Front End With Elm”. (I now work with Kevin at Culture Amp.) The idea was to have a clear data flow for a small “Universal Web App”: the page can trigger actions, actions get processed on the back end, the back end generates props for a page, and the props are used to render a view. Basically, the Elm architecture but with an integrated back end / API layer.

I liked this a lot, and built out quite a prototype, but haven’t touched it in over a year. I like this scope of “small, opinionated framework to give structure to a universal web app”.

The first prototype I built integrated with React on the client, which I think I’d skip this time. I also think I’d go further with the data flow and push for event-sourcing (I didn’t know the terminology at the time, but I’d implemented CQRS without Event Sourcing).

I also liked the name, and think I’ll reuse it! “Small Universe” spoke to it being a framework for “universal web apps”, where code is shared seamlessly between client and server. And also it being “small” – giving you all the building blocks for an entire app in a tight, coherent framework that is easy to build a mental model for.
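As a rough illustration of the data flow described above (page triggers actions, the back end processes them and generates props, props render a view), here is a minimal TypeScript sketch. It is not code from the prototype: the to-do example and every name in it (`Action`, `handleAction`, `getProps`, `view`) are assumptions made up for illustration, and the "back end" is just an in-memory array.

```typescript
// Hypothetical sketch of the data flow: page -> action -> back end -> props -> view.
// All names are invented for illustration; nothing here is the prototype's real API.

// Actions the page can trigger.
type Action =
  | { type: "addTodo"; text: string }
  | { type: "toggleTodo"; id: number };

// Props the back end generates for the page.
interface TodoPageProps {
  todos: { id: number; text: string; done: boolean }[];
}

// Stand-in for back-end storage.
const db: TodoPageProps["todos"] = [];

// Back end: process an action. The `never` assignment makes the compiler
// complain if a new Action variant is added but not handled here.
function handleAction(action: Action): void {
  switch (action.type) {
    case "addTodo":
      db.push({ id: db.length + 1, text: action.text, done: false });
      return;
    case "toggleTodo": {
      const todo = db.find((t) => t.id === action.id);
      if (todo) todo.done = !todo.done;
      return;
    }
    default: {
      const unhandled: never = action;
      throw new Error(`Unhandled action: ${JSON.stringify(unhandled)}`);
    }
  }
}

// Back end: generate props for the page (copied so the view can't mutate storage).
function getProps(): TodoPageProps {
  return { todos: db.map((t) => ({ ...t })) };
}

// Front end: render props to a view (a plain string stands in for HTML).
function view(props: TodoPageProps): string {
  return props.todos
    .map((t) => `[${t.done ? "x" : " "}] ${t.text}`)
    .join("\n");
}

handleAction({ type: "addTodo", text: "Write a framework" });
handleAction({ type: "toggleTodo", id: 1 });
console.log(view(getProps()));
```

Adding a third variant to `Action` without a matching `case` turns the `never` line into a compile error, which is a small taste of the "compiler tells you what to do next" experience, stretched across the client/server boundary.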

So am I going to do this?

I think so! I’m interested in having a project, and “working out loud” with blog posts alongside PRs to explore the problems I’m trying to solve and the approaches I’m experimenting with.

I don’t think it’ll necessarily become anything – and there’s a good chance I’ll not follow through because life gets busy (my wife and I are expecting a second child next month 😅) but I’m interested in sharing my initial thoughts and seeing where it goes from there.

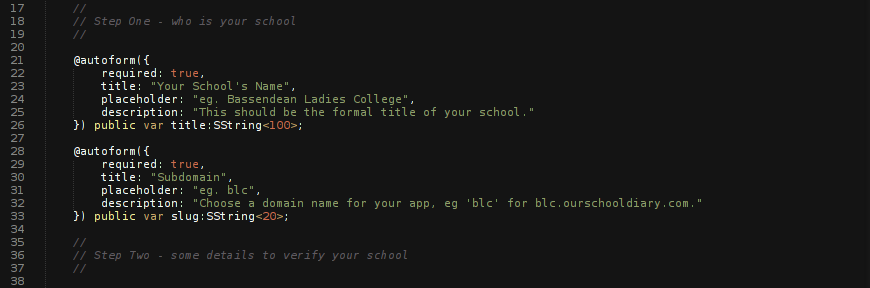

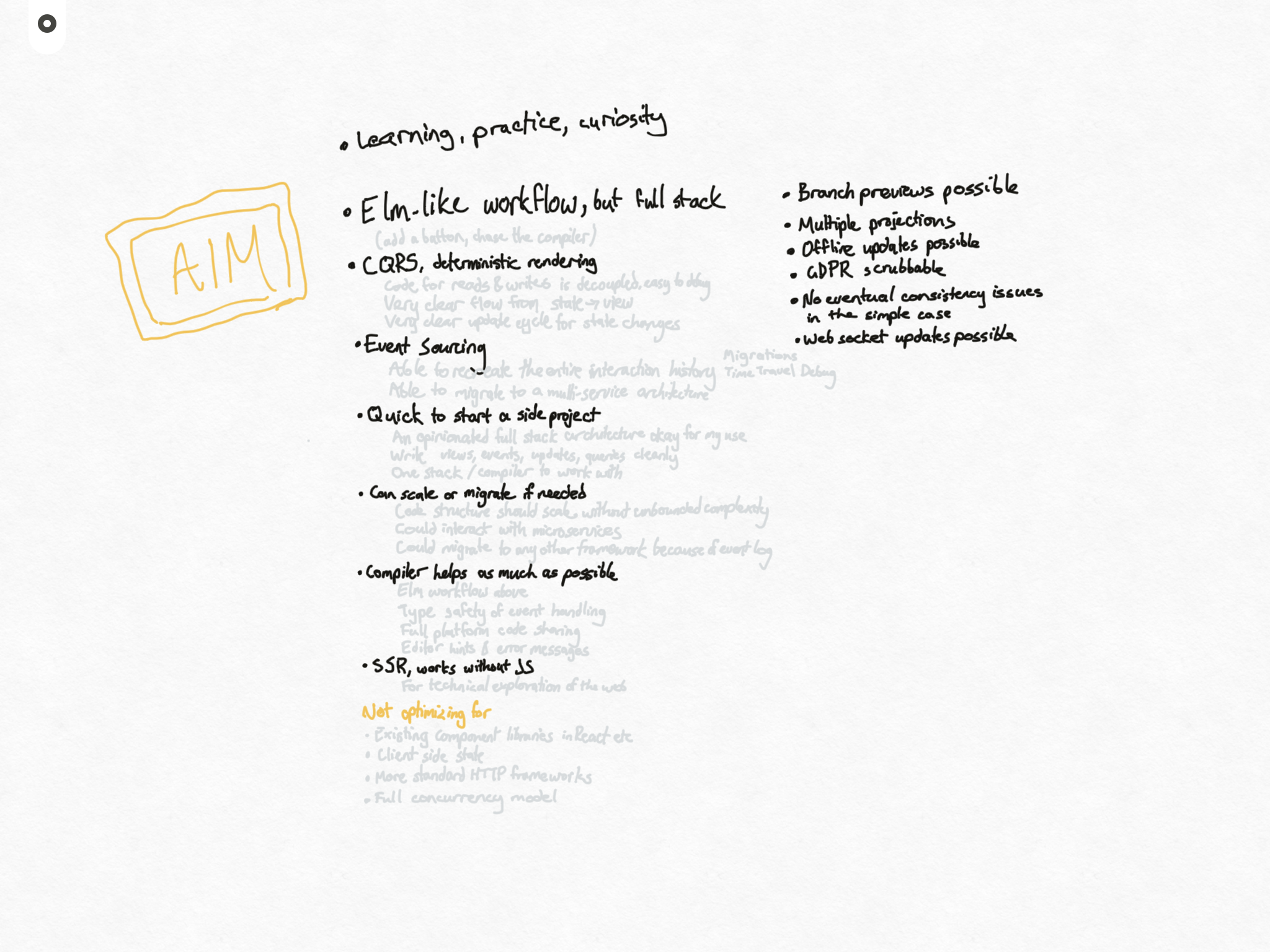

What am I optimizing for?

- My own learning, curiosity and practice. (See “Why” above)

- An Elm-like workflow, but full stack. Elm has this great workflow where you can create a new button in your view. The compiler will ask you to define an action for the button. Once you define an action (like `onClick save`) the compiler will ask you to write an “update” handler for when that action occurs. When you do that you’ll write the code to handle the save. You start with the UI, and then the compiler guides you through every step needed to see the feature working. By the time the compiler has run out of things to say, your front end probably works. I want the compiler to provide that experience, with guidance, hints and safety to build features across the front and back end stacks.

- Clear flow of data and logic. Every piece of logic in the app should have its place in the architecture, and it should be easy to find where something belongs. On the back end this looks like CQRS (Command Query Responsibility Segregation) – having the code paths that fetch data for a page (Queries) be completely separate from the code paths that change data (Commands). On the front end this looks like separating out state management from the views – the page state should be passed into a view function. There’s lots to dive into here, but I’ll save it for a future post.

- Start small but keep options open. I want this for myself and side projects. I want something that can start small, where the entire mental model can fit in my head, but which makes it easy to migrate to a more traditional framework, or a more advanced architecture, if the thing ever grows.

- Keeping open the possibility for a host of technical nice-to-haves:

  - Event sourcing.

  - Offline client-side usage.

  - Multiple projections.

  - Server side rendering.

  - GDPR “right to be forgotten”.

  - Using Web Sockets for speedy interface updates.

  - Ability to have “branch previews” so you can see what a PR will look like.

- And to call out some trade offs:

  - I’m not aiming for compatibility with React or other view layers. I think the idea I’m chasing handles data flow differently enough that it’s not worth trying to shoe-horn existing components.

  - I’m not aiming for microservices. For side projects I think a “marvelous monolith” is more sane. If the data is event sourced, a future transition to microservices will be easier.

  - Not aiming to support multiple back end targets, or front ends. I’ll probably pick just one back end stack to focus on, and focus on the web (not native / mobile).

  - Not aiming for optimum performance. If I can write an API signature in a way that can be optimized and parallelized in future, I will, but I’ll probably do some naive implementations up front (such as updating all projections synchronously in the main thread).

  - Not aiming to be a general HTTP framework that can handle arbitrary request and response types. There are plenty of good tools for that job.
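To make the CQRS and event sourcing ideas in the list above a bit more tangible, here is a small TypeScript sketch of one way they could fit together, including the naive "update every projection synchronously" approach. This is only a sketch under assumptions: the notes example and all the names (`NoteEvent`, `record`, `addNote`, `deleteNote`, `listNotes`) are hypothetical, and the event log lives in memory rather than in a real store.

```typescript
// Hypothetical sketch: commands append events, queries read projections.
// None of these names come from an existing library or from the framework itself.

type NoteEvent =
  | { type: "NoteAdded"; id: number; text: string }
  | { type: "NoteDeleted"; id: number };

// The append-only event log is the source of truth (event sourcing).
const eventLog: NoteEvent[] = [];

// Two read models ("projections"), each derived purely from events.
const notesById = new Map<number, string>();
let totalAdded = 0;

const projections: Array<(event: NoteEvent) => void> = [
  (event) => {
    if (event.type === "NoteAdded") notesById.set(event.id, event.text);
    else notesById.delete(event.id);
  },
  (event) => {
    if (event.type === "NoteAdded") totalAdded += 1;
  },
];

// Naive implementation: append the event, then update every projection
// synchronously before returning.
function record(event: NoteEvent): void {
  eventLog.push(event);
  for (const project of projections) project(event);
}

// Command side: validate, then emit events. Commands return no page data.
function addNote(text: string): void {
  if (text.trim() === "") throw new Error("Note text must not be empty");
  record({ type: "NoteAdded", id: eventLog.length + 1, text });
}

function deleteNote(id: number): void {
  if (!notesById.has(id)) throw new Error(`No note with id ${id}`);
  record({ type: "NoteDeleted", id });
}

// Query side: read from projections only, never change anything.
function listNotes(): string[] {
  return Array.from(notesById.values());
}

addNote("first");
addNote("second");
deleteNote(1);
console.log(listNotes(), totalAdded, eventLog.length);
```

Because callers only ever see `addNote`, `deleteNote` and `listNotes`, the body of `record` could later hand projection updates to a queue or worker without changing those signatures, which is the kind of "optimize later" room the trade-off above is after.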

Let’s begin

So to start off, here’s where I’ll do my work:

- jasononeil/smalluniverse – a new GitHub repo (completely blank for now)

- this blog – under the “smalluniverse” category